# How the Google Search Engine Works #

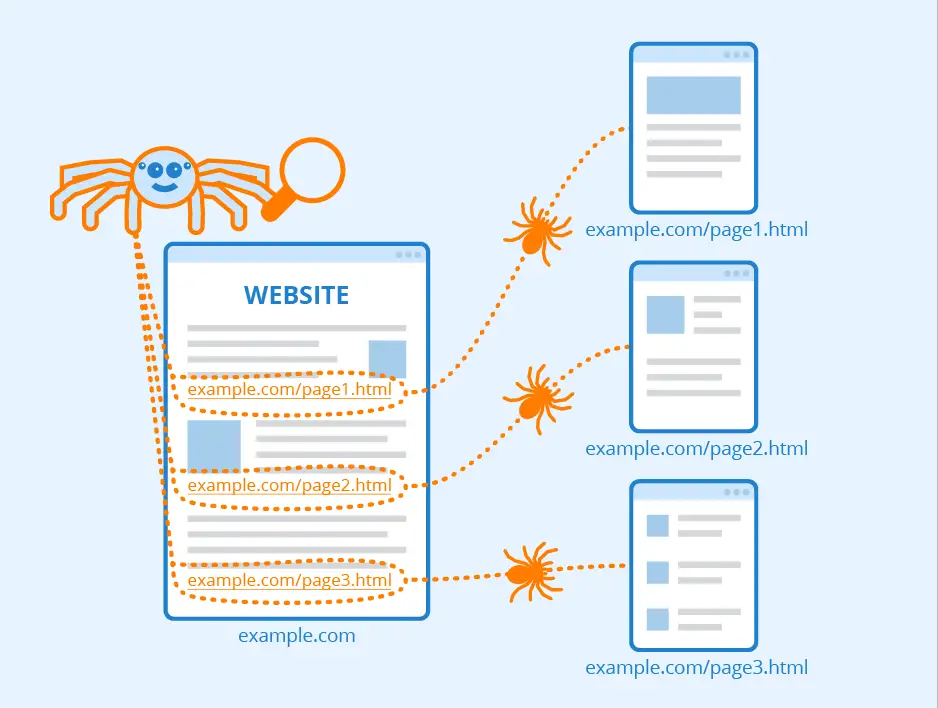

The DOM is the rendered HTML and Javascript code of the page that the crawlers look through to find links to other pages (samples shown above in red outlines). Whenever crawlers look at a web page, they look through the “Document Object Model” (or “DOM”) of the page to see what’s on it.

How about a visual example? In the figure below, you can see a screenshot of the home page of USA.gov: These website links bind together pages in a website and websites across the web, and in doing so, create a pathway for the crawlers to reach the trillions of interconnected website pages that exist. The method of travel by which the crawlers travel are website links. Search engines have crawlers (aka spiders) that “crawl” the World Wide Web to discover pages that exist in order to help identify the best web pages to be evaluated for a query. Let’s look closer at a simplified explanation of each … Crawling the Web

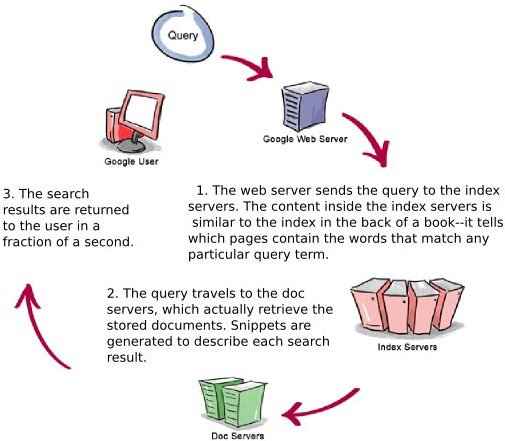

There are many pages that Google keeps out of the crawling, indexing and ranking process for various reasons. In actuality, it’s probably far more than that number. A Massive UndertakingĪt the time of writing, Google says it knows of more than 130 trillion pages on the web. While the details of the process are actually quite complex, knowing the (non-technical) basics of crawling, indexing and ranking can put you well on your way to better understanding the methods behind a search engine optimization strategy. Do you know how search engines like Google find, crawl, and rank the trillions of web pages out there in order to serve up the results you see when you type in a query?
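The link-following process described above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not a description of how Google’s crawler actually works. It parses static HTML with the standard library’s `html.parser` rather than a rendered DOM, so links added by JavaScript would be missed. It also crawls a hypothetical in-memory toy web (`TOY_WEB`, with made-up `example.com`/`example.org` pages) instead of fetching pages over the network. The names `LinkExtractor`, `extract_links`, and `crawl` are illustrative only.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag in an HTML document."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page URL and drop #fragments.
                absolute, _ = urldefrag(urljoin(self.base_url, value))
                self.links.append(absolute)


def extract_links(base_url: str, html: str) -> list[str]:
    """Return the absolute URLs of all links found in `html`."""
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


def crawl(seed: str, fetch, max_pages: int = 100) -> list[str]:
    """Breadth-first crawl: visit the seed, then every page reachable by links.

    `fetch` is any callable mapping a URL to its HTML, or None if unavailable.
    """
    seen = {seed}            # URLs already discovered, so none is queued twice
    queue = deque([seed])    # frontier of discovered but not yet visited URLs
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:     # page unavailable: skip it
            continue
        visited.append(url)
        for link in extract_links(url, html):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# Hypothetical toy web standing in for real HTTP fetches.
TOY_WEB = {
    "https://example.com/": '<a href="/about">About</a> <a href="/services#top">Services</a>',
    "https://example.com/about": '<a href="/">Home</a> <a href="https://example.org/">Partner</a>',
    "https://example.com/services": "<p>No links here</p>",
    "https://example.org/": '<a href="https://example.com/about">Back</a>',
}

if __name__ == "__main__":
    print(crawl("https://example.com/", TOY_WEB.get))
    # → ['https://example.com/', 'https://example.com/about',
    #    'https://example.com/services', 'https://example.org/']
```

Breadth-first order means pages one link away from the starting page are visited before pages two links away. The `seen` set keeps the crawler from looping between pages that link back to each other, such as the home page and the About page in the toy web. A production crawler would also need real network fetching, rate limiting, and JavaScript rendering, all of which this sketch omits.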
